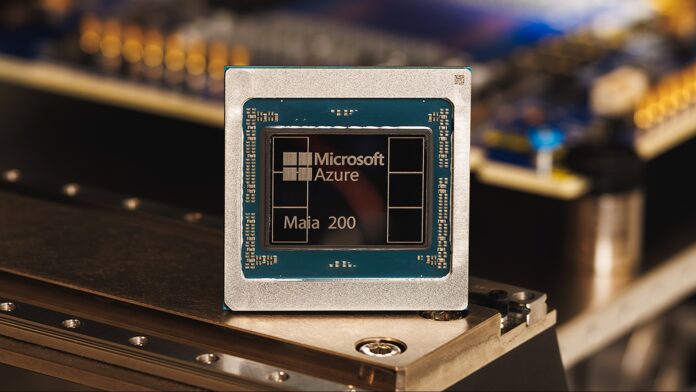

Microsoft has unveiled its Maia 200 accelerator chip, claiming up to three times the performance of competing hardware from Google and Amazon. That would be more than a marginal improvement; it signals a shift in the AI hardware landscape, particularly in inference, the process of using trained AI models to make predictions and generate outputs.

The Rise of Specialized AI Hardware

For years, companies have relied on general-purpose processors (CPUs) and graphics cards (GPUs) to power AI. However, as models grow larger and more complex, specialized AI chips like Maia 200 are becoming essential. These chips are designed from the ground up to accelerate AI tasks, offering significant gains in speed and efficiency.

The Maia 200 delivers more than 10 petaflops (10 quadrillion floating-point operations per second), a figure once associated only with the world's most powerful supercomputers. The comparison is loose, since supercomputer rankings measure 64-bit (FP64) arithmetic. Maia 200 reaches its headline number using 4-bit floating-point precision (FP4), a low-precision number format that gives up some accuracy in exchange for much higher throughput. In the less aggressive 8-bit precision (FP8), the chip delivers 5 petaflops.
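To make the FP4 trade-off concrete, here is a minimal Python sketch of 4-bit floating-point quantization. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit) used by the OCP Microscaling (MX) FP4 format; the article does not say which FP4 encoding Maia 200 uses, and the per-block scaling in the hypothetical `quantize_block` function is a simplified illustration, not Microsoft's actual scheme.

```python
import itertools


def e2m1_values():
    """Return every value an FP4 E2M1 number can hold, sorted ascending.

    Layout assumed here: 1 sign bit, 2 exponent bits (bias 1), 1 mantissa bit,
    with no infinities or NaNs, as in the OCP Microscaling (MX) FP4 format.
    """
    values = set()
    for sign, exp, man in itertools.product((0, 1), range(4), (0, 1)):
        if exp == 0:
            # Subnormal: no implicit leading 1; scale is 2**(1 - bias) = 1.
            mag = man * 0.5
        else:
            # Normal: implicit leading 1, scaled by 2**(exp - bias).
            mag = (1 + man * 0.5) * 2 ** (exp - 1)
        values.add(-mag if sign else mag)  # +0.0 and -0.0 collapse to one entry
    return sorted(values)


FP4_GRID = e2m1_values()  # 15 distinct values from -6.0 to 6.0


def quantize_block(xs):
    """Hypothetical per-block scheme: scale so the largest magnitude maps to 6.0
    (the FP4 maximum), then snap each scaled value to the nearest grid point.
    Ties resolve to the lower grid value, a simplification of hardware rounding."""
    amax = max(abs(x) for x in xs)
    scale = amax / 6.0 if amax > 0 else 1.0
    q = [min(FP4_GRID, key=lambda g: abs(x / scale - g)) for x in xs]
    return q, scale


def dequantize(q, scale):
    """Map FP4 grid values back to the original range."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -0.07, 0.61]  # made-up example weights
    q, scale = quantize_block(weights)
    for w, r in zip(weights, dequantize(q, scale)):
        print(f"{w:+.2f} -> {r:+.3f}  (error {abs(w - r):.3f})")
```

Every weight in a block lands on one of only 15 scaled levels, which is where the lost accuracy comes from. An FP8 format has 256 bit patterns, so it represents values far more finely at the cost of moving twice as many bits per number.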

Microsoft’s Internal Advantage… For Now

Currently, Microsoft is deploying Maia 200 exclusively within its own Azure cloud infrastructure. It’s being used to generate synthetic data, refine next-generation large language models (LLMs), and power AI services like Microsoft Foundry and Copilot. This gives Microsoft a substantial edge in providing advanced AI capabilities through its cloud platform.

However, the company has indicated that wider customer availability is coming, suggesting that other organizations will soon be able to access Maia 200’s power via Azure. Whether the chips will eventually be sold standalone remains to be seen.

Why This Matters: Efficiency and Cost

The Maia 200 isn't just about raw speed. Microsoft claims it delivers 30% better performance per dollar than existing systems. The chip is fabricated on TSMC's cutting-edge 3-nanometer process and packs 100 billion transistors onto each chip, a significant leap in density and efficiency.
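A "30% better performance per dollar" claim is easy to misread as "30% cheaper." The short Python sketch below works through the arithmetic; the baseline spend is a hypothetical figure chosen only for illustration, and the 30% gain is Microsoft's claim taken at face value.

```python
def cost_for_same_work(baseline_cost: float, perf_per_dollar_gain: float) -> float:
    """Cost of a fixed workload after performance per dollar improves by
    `perf_per_dollar_gain` (0.30 means 30% better).

    Performance per dollar = work / cost, so with work held constant,
    cost scales by 1 / (1 + gain).
    """
    return baseline_cost / (1.0 + perf_per_dollar_gain)


if __name__ == "__main__":
    baseline = 1_000_000.0  # hypothetical annual inference bill, in dollars
    new_cost = cost_for_same_work(baseline, 0.30)  # Microsoft's claimed 30% gain
    saving = 1.0 - new_cost / baseline
    print(f"new cost: ${new_cost:,.0f}, saving: {saving:.1%}")
```

For a fixed amount of work, a 30% gain in performance per dollar cuts the bill to 1/1.3 of its original size, a saving of about 23%, not 30%. Alternatively, the same budget buys 30% more work.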

This cost-effectiveness is crucial because training and running large AI models is incredibly expensive. Better hardware means lower operational costs, making AI more accessible and sustainable.

Implications for Developers and End Users

While everyday users won't immediately notice a difference, the underlying performance boost should eventually translate into faster response times and more advanced features in AI-powered tools like Copilot. Developers and scientists using Azure OpenAI will also benefit from higher throughput and lower latency, accelerating research and development in areas such as weather modeling and advanced simulations.
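Peak FLOPS only sets a ceiling on the speed users eventually feel. The back-of-the-envelope Python sketch below converts the quoted 10 petaflops (FP4) and 5 petaflops (FP8) into an upper bound on generated tokens per second. It assumes the common rule of thumb that a dense transformer spends about 2 FLOPs per parameter per generated token, and it uses a hypothetical 70-billion-parameter model; neither figure comes from Microsoft.

```python
def peak_tokens_per_second(peak_flops: float, params: float, utilization: float = 1.0) -> float:
    """Compute-bound ceiling on generated tokens per second.

    Uses the rule of thumb that a dense transformer forward pass costs about
    2 FLOPs per parameter per token. `utilization` is the fraction of peak
    FLOPS actually achieved (1.0 = theoretical peak).
    """
    flops_per_token = 2.0 * params
    return peak_flops * utilization / flops_per_token


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter dense model
    for label, flops in (("FP4", 10e15), ("FP8", 5e15)):
        ceiling = peak_tokens_per_second(flops, params)
        print(f"{label}: at most {ceiling:,.0f} tokens/s per chip")
```

Under these assumptions the ceiling is roughly 71,000 tokens per second in FP4 and 36,000 in FP8. Real deployments land well below that, because token generation is usually limited by memory bandwidth rather than arithmetic, and chips rarely sustain their peak FLOPS.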

The Maia 200 represents a strategic investment by Microsoft, positioning the company as a leader in the next generation of AI infrastructure. While the chip is currently confined to Microsoft's own cloud, wider availability could reshape the competitive landscape in the cloud computing market.

Microsoft's Maia 200 is more than another piece of hardware: it underscores the growing importance of specialized AI acceleration and is a clear indicator of where the industry is heading.